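Interface

To make it as easy as possible to create custom chains, we've implemented a "Runnable" protocol. Most components implement the Runnable protocol. It is a standard interface that makes it easy to define custom chains and to invoke them in a standard way. The standard interface includes: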

- stream: stream back chunks of the response
- invoke: call the chain on an input
- batch: call the chain on a list of inputs

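These also have corresponding async methods:

- astream: stream back chunks of the response asynchronously
- ainvoke: call the chain on an input asynchronously
- abatch: call the chain on a list of inputs asynchronously
- astream_log: stream back intermediate steps as they happen, in addition to the final response
- astream_events: beta; stream events as they happen in the chain (introduced in langchain-core 0.1.14)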
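As a rough mental model of what this shared interface provides, the sketch below defines a toy `MiniRunnable` class in plain Python. The class, its word-splitting `stream`, and the `fill_prompt` / `shout` steps are hypothetical and are not LangChain's implementation; they only illustrate how `invoke`, `batch`, `stream`, an async counterpart, and `|` composition can sit behind one uniform surface.

```python
import asyncio
from typing import Any, Callable, Iterator, List


class MiniRunnable:
    """Toy stand-in for the Runnable interface (not LangChain's implementation)."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def invoke(self, value: Any) -> Any:
        # Call the wrapped function on a single input.
        return self.func(value)

    def batch(self, values: List[Any]) -> List[Any]:
        # Call the runnable on each input in a list, preserving order.
        return [self.invoke(v) for v in values]

    def stream(self, value: Any) -> Iterator[str]:
        # Yield the result piece by piece; here the string result is split into words.
        for word in str(self.invoke(value)).split(" "):
            yield word

    async def ainvoke(self, value: Any) -> Any:
        # Async counterpart: run the synchronous call in a worker thread.
        return await asyncio.to_thread(self.invoke, value)

    def __or__(self, other: "MiniRunnable") -> "MiniRunnable":
        # `a | b` builds a new runnable that feeds a's output into b.
        return MiniRunnable(lambda value: other.invoke(self.invoke(value)))


# Two hypothetical steps composed into a chain.
fill_prompt = MiniRunnable(lambda d: f"tell me a joke about {d['topic']}")
shout = MiniRunnable(lambda text: text.upper())
mini_chain = fill_prompt | shout

print(mini_chain.invoke({"topic": "bears"}))
print(mini_chain.batch([{"topic": "bears"}, {"topic": "cats"}]))
print(list(mini_chain.stream({"topic": "bears"})))
print(asyncio.run(mini_chain.ainvoke({"topic": "cats"})))
```

Because every step exposes the same methods, the composed `mini_chain` can be called exactly like either of its parts.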

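The input type and output type vary by component:

| Component | Input Type | Output Type |
|---|---|---|
| Prompt | Dictionary | PromptValue |
| ChatModel | Single string, list of chat messages, or a PromptValue | ChatMessage |
| LLM | Single string, list of chat messages, or a PromptValue | String |
| OutputParser | The output of an LLM or ChatModel | Depends on the parser |
| Retriever | Single string | List of Documents |
| Tool | Single string or dictionary, depending on the tool | Depends on the tool |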

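All Runnables expose input and output schemas for inspecting their inputs and outputs:

- input_schema: an input Pydantic model auto-generated from the structure of the Runnable
- output_schema: an output Pydantic model auto-generated from the structure of the Runnable

Let's take a look at these methods. To do so, we'll create a super simple PromptTemplate + ChatModel chain.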

%pip install --upgrade --quiet langchain-core langchain-community langchain-openai

from langchain_core.prompts import ChatPromptTemplate

from langchain_openai import ChatOpenAI

model = ChatOpenAI()

prompt = ChatPromptTemplate.from_template("tell me a joke about {topic}")

chain = prompt | model

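Input Schema

A description of the inputs accepted by a Runnable. This is a Pydantic model generated dynamically from the structure of any Runnable. You can call .schema() on it to obtain a JSONSchema representation.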

# The input schema of the chain is the input schema of its first part, the prompt.

chain.input_schema.schema()

{'title': 'PromptInput',

'type': 'object',

'properties': {'topic': {'title': 'Topic', 'type': 'string'}}}

prompt.input_schema.schema()

{'title': 'PromptInput',

'type': 'object',

'properties': {'topic': {'title': 'Topic', 'type': 'string'}}}
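The prompt's schema above has one string property per template variable. As a rough illustration of how such a schema could be derived from a template, the sketch below uses the standard library's `string.Formatter`. The helper `sketch_input_schema` is hypothetical and is not how LangChain builds its Pydantic models.

```python
from string import Formatter
from typing import Any, Dict


def sketch_input_schema(template: str) -> Dict[str, Any]:
    """Build a JSON-Schema-like dict with one string property per template variable."""
    # Formatter().parse yields (literal_text, field_name, format_spec, conversion);
    # field_name is None or empty for pieces that contain no variable.
    names = [field for _, field, _, _ in Formatter().parse(template) if field]
    return {
        "title": "PromptInput",
        "type": "object",
        "properties": {
            name: {"title": name.replace("_", " ").title(), "type": "string"}
            for name in names
        },
    }


schema = sketch_input_schema("tell me a joke about {topic}")
print(schema)
```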

model.input_schema.schema()

{'title': 'ChatOpenAIInput',

'anyOf': [{'type': 'string'},

{'$ref': '#/definitions/StringPromptValue'},

{'$ref': '#/definitions/ChatPromptValueConcrete'},

{'type': 'array',

'items': {'anyOf': [{'$ref': '#/definitions/AIMessage'},

{'$ref': '#/definitions/HumanMessage'},

{'$ref': '#/definitions/ChatMessage'},

{'$ref': '#/definitions/SystemMessage'},

{'$ref': '#/definitions/FunctionMessage'},

{'$ref': '#/definitions/ToolMessage'}]}}],

'definitions': {'StringPromptValue': {'title': 'StringPromptValue',

'description': 'String prompt value.',

'type': 'object',

'properties': {'text': {'title': 'Text', 'type': 'string'},

'type': {'title': 'Type',

'default': 'StringPromptValue',

'enum': ['StringPromptValue'],

'type': 'string'}},

'required': ['text']},

'AIMessage': {'title': 'AIMessage',

'description': 'A Message from an AI.',

'type': 'object',

'properties': {'content': {'title': 'Content',

'anyOf': [{'type': 'string'},

{'type': 'array',

'items': {'anyOf': [{'type': 'string'}, {'type': 'object'}]}}]},

'additional_kwargs': {'title': 'Additional Kwargs', 'type': 'object'},

'type': {'title': 'Type',

'default': 'ai',

'enum': ['ai'],

'type': 'string'},

'example': {'title': 'Example', 'default': False, 'type': 'boolean'}},

'required': ['content']},

'HumanMessage': {'title': 'HumanMessage',

'description': 'A Message from a human.',

'type': 'object',

'properties': {'content': {'title': 'Content',

'anyOf': [{'type': 'string'},

{'type': 'array',

'items': {'anyOf': [{'type': 'string'}, {'type': 'object'}]}}]},

'additional_kwargs': {'title': 'Additional Kwargs', 'type': 'object'},

'type': {'title': 'Type',

'default': 'human',

'enum': ['human'],

'type': 'string'},

'example': {'title': 'Example', 'default': False, 'type': 'boolean'}},

'required': ['content']},

'ChatMessage': {'title': 'ChatMessage',

'description': 'A Message that can be assigned an arbitrary speaker (i.e. role).',

'type': 'object',

'properties': {'content': {'title': 'Content',

'anyOf': [{'type': 'string'},

{'type': 'array',

'items': {'anyOf': [{'type': 'string'}, {'type': 'object'}]}}]},

'additional_kwargs': {'title': 'Additional Kwargs', 'type': 'object'},

'type': {'title': 'Type',

'default': 'chat',

'enum': ['chat'],

'type': 'string'},

'role': {'title': 'Role', 'type': 'string'}},

'required': ['content', 'role']},

'SystemMessage': {'title': 'SystemMessage',

'description': 'A Message for priming AI behavior, usually passed in as the first of a sequence\nof input messages.',

'type': 'object',

'properties': {'content': {'title': 'Content',

'anyOf': [{'type': 'string'},

{'type': 'array',

'items': {'anyOf': [{'type': 'string'}, {'type': 'object'}]}}]},

'additional_kwargs': {'title': 'Additional Kwargs', 'type': 'object'},

'type': {'title': 'Type',

'default': 'system',

'enum': ['system'],

'type': 'string'}},

'required': ['content']},

'FunctionMessage': {'title': 'FunctionMessage',

'description': 'A Message for passing the result of executing a function back to a model.',

'type': 'object',

'properties': {'content': {'title': 'Content',

'anyOf': [{'type': 'string'},

{'type': 'array',

'items': {'anyOf': [{'type': 'string'}, {'type': 'object'}]}}]},

'additional_kwargs': {'title': 'Additional Kwargs', 'type': 'object'},

'type': {'title': 'Type',

'default': 'function',

'enum': ['function'],

'type': 'string'},

'name': {'title': 'Name', 'type': 'string'}},

'required': ['content', 'name']},

'ToolMessage': {'title': 'ToolMessage',

'description': 'A Message for passing the result of executing a tool back to a model.',

'type': 'object',

'properties': {'content': {'title': 'Content',

'anyOf': [{'type': 'string'},

{'type': 'array',

'items': {'anyOf': [{'type': 'string'}, {'type': 'object'}]}}]},

'additional_kwargs': {'title': 'Additional Kwargs', 'type': 'object'},

'type': {'title': 'Type',

'default': 'tool',

'enum': ['tool'],

'type': 'string'},

'tool_call_id': {'title': 'Tool Call Id', 'type': 'string'}},

'required': ['content', 'tool_call_id']},

'ChatPromptValueConcrete': {'title': 'ChatPromptValueConcrete',

'description': 'Chat prompt value which explicitly lists out the message types it accepts.\nFor use in external schemas.',

'type': 'object',

'properties': {'messages': {'title': 'Messages',

'type': 'array',

'items': {'anyOf': [{'$ref': '#/definitions/AIMessage'},

{'$ref': '#/definitions/HumanMessage'},

{'$ref': '#/definitions/ChatMessage'},

{'$ref': '#/definitions/SystemMessage'},

{'$ref': '#/definitions/FunctionMessage'},

{'$ref': '#/definitions/ToolMessage'}]}},

'type': {'title': 'Type',

'default': 'ChatPromptValueConcrete',

'enum': ['ChatPromptValueConcrete'],

'type': 'string'}},

'required': ['messages']}}}

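Output Schema

A description of the outputs produced by a Runnable. This is a Pydantic model generated dynamically from the structure of any Runnable. You can call .schema() on it to obtain a JSONSchema representation.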

# The output schema of the chain is the output schema of its last part, in this case a ChatModel, which outputs a ChatMessage

chain.output_schema.schema()

{'title': 'ChatOpenAIOutput',

'anyOf': [{'$ref': '#/definitions/AIMessage'},

{'$ref': '#/definitions/HumanMessage'},

{'$ref': '#/definitions/ChatMessage'},

{'$ref': '#/definitions/SystemMessage'},

{'$ref': '#/definitions/FunctionMessage'},

{'$ref': '#/definitions/ToolMessage'}],

'definitions': {'AIMessage': {'title': 'AIMessage',

'description': 'A Message from an AI.',

'type': 'object',

'properties': {'content': {'title': 'Content',

'anyOf': [{'type': 'string'},

{'type': 'array',

'items': {'anyOf': [{'type': 'string'}, {'type': 'object'}]}}]},

'additional_kwargs': {'title': 'Additional Kwargs', 'type': 'object'},

'type': {'title': 'Type',

'default': 'ai',

'enum': ['ai'],

'type': 'string'},

'example': {'title': 'Example', 'default': False, 'type': 'boolean'}},

'required': ['content']},

'HumanMessage': {'title': 'HumanMessage',

'description': 'A Message from a human.',

'type': 'object',

'properties': {'content': {'title': 'Content',

'anyOf': [{'type': 'string'},

{'type': 'array',

'items': {'anyOf': [{'type': 'string'}, {'type': 'object'}]}}]},

'additional_kwargs': {'title': 'Additional Kwargs', 'type': 'object'},

'type': {'title': 'Type',

'default': 'human',

'enum': ['human'],

'type': 'string'},

'example': {'title': 'Example', 'default': False, 'type': 'boolean'}},

'required': ['content']},

'ChatMessage': {'title': 'ChatMessage',

'description': 'A Message that can be assigned an arbitrary speaker (i.e. role).',

'type': 'object',

'properties': {'content': {'title': 'Content',

'anyOf': [{'type': 'string'},

{'type': 'array',

'items': {'anyOf': [{'type': 'string'}, {'type': 'object'}]}}]},

'additional_kwargs': {'title': 'Additional Kwargs', 'type': 'object'},

'type': {'title': 'Type',

'default': 'chat',

'enum': ['chat'],

'type': 'string'},

'role': {'title': 'Role', 'type': 'string'}},

'required': ['content', 'role']},

'SystemMessage': {'title': 'SystemMessage',

'description': 'A Message for priming AI behavior, usually passed in as the first of a sequence\nof input messages.',

'type': 'object',

'properties': {'content': {'title': 'Content',

'anyOf': [{'type': 'string'},

{'type': 'array',

'items': {'anyOf': [{'type': 'string'}, {'type': 'object'}]}}]},

'additional_kwargs': {'title': 'Additional Kwargs', 'type': 'object'},

'type': {'title': 'Type',

'default': 'system',

'enum': ['system'],

'type': 'string'}},

'required': ['content']},

'FunctionMessage': {'title': 'FunctionMessage',

'description': 'A Message for passing the result of executing a function back to a model.',

'type': 'object',

'properties': {'content': {'title': 'Content',

'anyOf': [{'type': 'string'},

{'type': 'array',

'items': {'anyOf': [{'type': 'string'}, {'type': 'object'}]}}]},

'additional_kwargs': {'title': 'Additional Kwargs', 'type': 'object'},

'type': {'title': 'Type',

'default': 'function',

'enum': ['function'],

'type': 'string'},

'name': {'title': 'Name', 'type': 'string'}},

'required': ['content', 'name']},

'ToolMessage': {'title': 'ToolMessage',

'description': 'A Message for passing the result of executing a tool back to a model.',

'type': 'object',

'properties': {'content': {'title': 'Content',

'anyOf': [{'type': 'string'},

{'type': 'array',

'items': {'anyOf': [{'type': 'string'}, {'type': 'object'}]}}]},

'additional_kwargs': {'title': 'Additional Kwargs', 'type': 'object'},

'type': {'title': 'Type',

'default': 'tool',

'enum': ['tool'],

'type': 'string'},

'tool_call_id': {'title': 'Tool Call Id', 'type': 'string'}},

'required': ['content', 'tool_call_id']}}}

Stream

for s in chain.stream({"topic": "bears"}):
    print(s.content, end="", flush=True)

Sure, here's a bear-themed joke for you:

Why don't bears wear shoes?

Because they already have bear feet!

Invoke

chain.invoke({"topic": "bears"})

AIMessage(content="Why don't bears wear shoes? \n\nBecause they have bear feet!")

You can set the number of concurrent requests with the max_concurrency parameter:

chain.batch([{"topic": "bears"}, {"topic": "cats"}], config={"max_concurrency": 5})

[AIMessage(content="Why don't bears wear shoes?\n\nBecause they have bear feet!"),

AIMessage(content="Why don't cats play poker in the wild? Too many cheetahs!")]
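One way to picture `max_concurrency` is as a cap on how many calls are in flight at once. The sketch below is a stand-in built on a thread pool, with a hypothetical `slow_upper` function in place of a real chain. It is an assumption about the general technique, not a description of LangChain's internals.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def batch(func: Callable[[T], R], inputs: List[T], max_concurrency: int) -> List[R]:
    """Run func over inputs with at most max_concurrency calls in flight; keep input order."""
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        # pool.map returns results in the order of the inputs, not completion order.
        return list(pool.map(func, inputs))


in_flight = 0
peak = 0
lock = threading.Lock()


def slow_upper(text: str) -> str:
    """Hypothetical slow call that records how many calls overlap."""
    global in_flight, peak
    with lock:
        in_flight += 1
        peak = max(peak, in_flight)
    time.sleep(0.05)  # simulate network latency
    with lock:
        in_flight -= 1
    return text.upper()


results = batch(slow_upper, ["bears", "cats", "dogs", "owls"], max_concurrency=2)
print(results, "peak concurrency:", peak)
```

The results come back in input order even though the calls overlap, and the recorded peak never exceeds the limit.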

Async Stream

async for s in chain.astream({"topic": "bears"}):
    print(s.content, end="", flush=True)

AIMessage(content="Why don't bears ever wear shoes?\n\nBecause they already have bear feet!")
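A top-level `async for`, as used above, works in a notebook or another environment that already runs an event loop. In a plain script, the loop has to live inside a coroutine that is started with `asyncio.run`. A minimal sketch, with a hypothetical `fake_astream` generator standing in for `chain.astream`:

```python
import asyncio
from typing import AsyncIterator, List


async def fake_astream(topic: str) -> AsyncIterator[str]:
    """Hypothetical stand-in for chain.astream: yields text chunks asynchronously."""
    for chunk in ["Why don't ", topic, " wear shoes?"]:
        await asyncio.sleep(0)  # hand control back to the event loop between chunks
        yield chunk


async def collect(topic: str) -> List[str]:
    """Consume the async stream inside a coroutine and gather the chunks."""
    chunks: List[str] = []
    async for chunk in fake_astream(topic):
        chunks.append(chunk)
    return chunks


chunks = asyncio.run(collect("bears"))
print("".join(chunks))
```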

Async Stream Events (beta)

Event streaming is a beta API and may change somewhat based on feedback.

Note: introduced in langchain-core 0.1.14

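For now, to make everything work properly when using the astream_events API, please:

- Use async throughout the code (including async tools, etc.).
- Propagate callbacks if you define custom functions or runnables.
- Whenever you use runnables without LCEL, make sure to call .astream() on LLMs rather than .ainvoke, to force the LLM to stream tokens.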

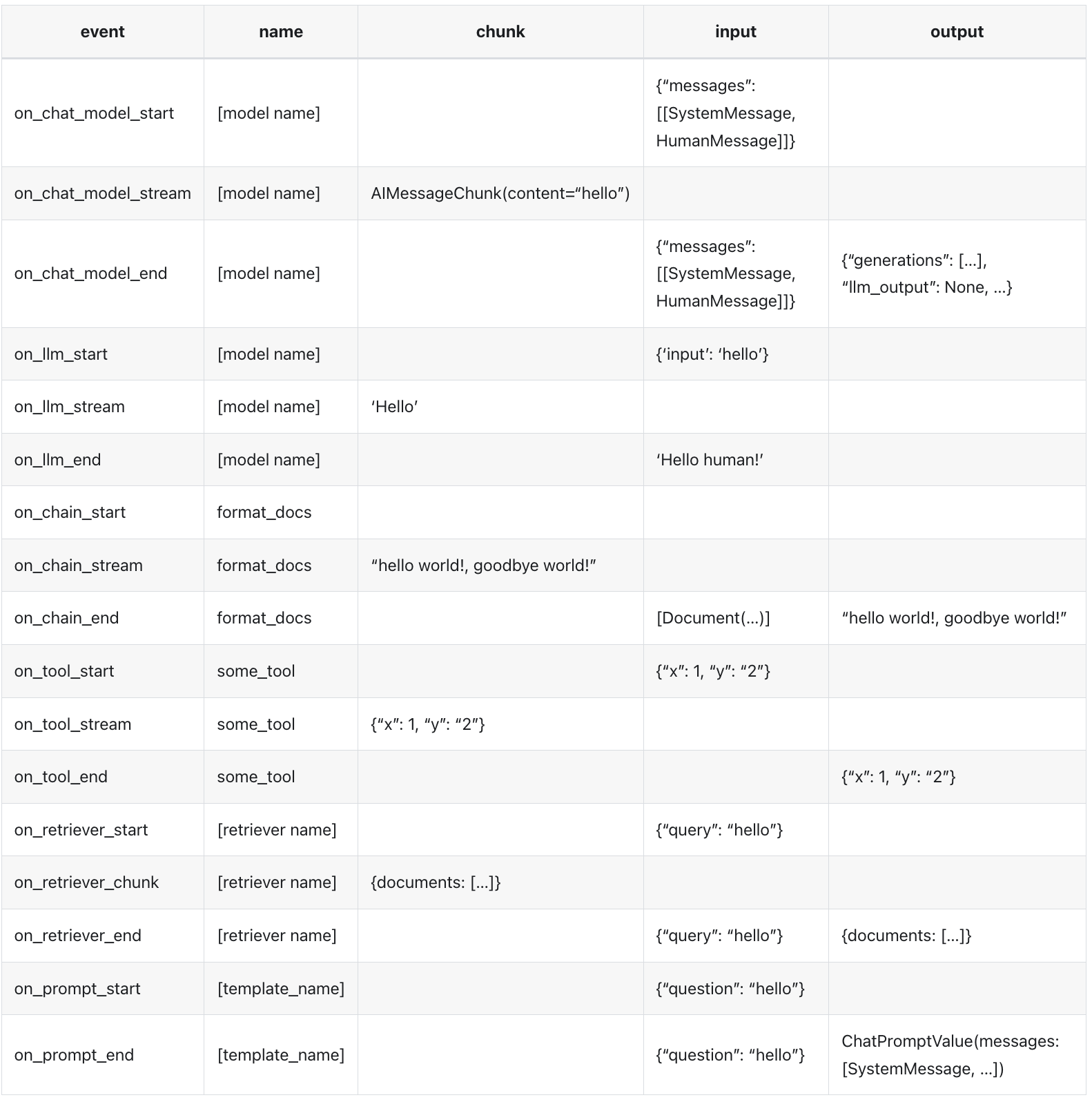

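Event Reference

Here is a reference table that shows some of the events that the various Runnable objects may emit. Definitions for some of the Runnables are included after the table.

When streaming, the inputs for a runnable are not available until the input stream has been entirely consumed. This means that the inputs are available in the corresponding end events rather than in the start events.

Here are the declarations associated with the events shown above: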

format_docs:

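from typing import List

from langchain_core.documents import Document
from langchain_core.runnables import RunnableLambda

def format_docs(docs: List[Document]) -> str:
    '''Format the docs.'''
    return ", ".join([doc.page_content for doc in docs])

format_docs = RunnableLambda(format_docs)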

some_tool

from langchain_core.tools import tool

@tool
def some_tool(x: int, y: str) -> dict:
    '''Some_tool.'''
    return {"x": x, "y": y}

prompt

template = ChatPromptTemplate.from_messages(
    [("system", "You are Cat Agent 007"), ("human", "{question}")]
).with_config({"run_name": "my_template", "tags": ["my_template"]})

Let's define a new chain to make it more interesting to show off the astream_events interface (and later the astream_log interface).

from langchain_community.vectorstores import FAISS

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnablePassthrough

from langchain_openai import OpenAIEmbeddings

template = """Answer the question based only on the following context:

{context}

Question: {question}

"""

prompt = ChatPromptTemplate.from_template(template)

vectorstore = FAISS.from_texts(
    ["harrison worked at kensho"], embedding=OpenAIEmbeddings()
)

retriever = vectorstore.as_retriever()

retrieval_chain = (
    {
        "context": retriever.with_config(run_name="Docs"),
        "question": RunnablePassthrough(),
    }
    | prompt
    | model.with_config(run_name="my_llm")
    | StrOutputParser()
)

/home/eugene/src/langchain/libs/core/langchain_core/_api/beta_decorator.py:86: LangChainBetaWarning: This API is in beta and may change in the future.

warn_beta(

--

Retrieved the following documents:

[Document(page_content='harrison worked at kensho')]

Streaming LLM:

|H|arrison| worked| at| Kens|ho|.||

Done streaming LLM.
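The output above has the shape of a loop that dispatches on each event's type. The sketch below reproduces that dispatch over a hand-written list of event dictionaries. The event names and the `data` layout are assumptions modeled on the output shown, and plain strings stand in for the richer objects (documents, message chunks) that real events would carry. With a real chain, the events would come from the chain's astream_events method instead of `sample_events`.

```python
from typing import Any, Dict, Iterable, List

# Hand-written stand-ins for streamed events (assumed shape, simplified payloads).
sample_events: List[Dict[str, Any]] = [
    {"event": "on_retriever_end", "name": "Docs",
     "data": {"output": {"documents": ["harrison worked at kensho"]}}},
    {"event": "on_chat_model_start", "name": "my_llm", "data": {}},
    {"event": "on_chat_model_stream", "name": "my_llm", "data": {"chunk": "H"}},
    {"event": "on_chat_model_stream", "name": "my_llm", "data": {"chunk": "arrison"}},
    {"event": "on_chat_model_end", "name": "my_llm", "data": {}},
]


def render(events: Iterable[Dict[str, Any]]) -> str:
    """Turn a sequence of events into the text a console loop would print."""
    parts: List[str] = []
    for event in events:
        kind = event["event"]
        if kind == "on_retriever_end":
            parts.append("--\nRetrieved the following documents:\n")
            parts.append(f"{event['data']['output']['documents']}\n")
        elif kind == "on_chat_model_start":
            parts.append("Streaming LLM:\n")
        elif kind == "on_chat_model_stream":
            parts.append(event["data"]["chunk"] + "|")
        elif kind == "on_chat_model_end":
            parts.append("\nDone streaming LLM.\n")
        # Any other event type is ignored in this sketch.
    return "".join(parts)


rendered = render(sample_events)
print(rendered)
```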

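Async Stream Intermediate Steps

All runnables also have a method .astream_log(), which streams (as they happen) all or part of the intermediate steps of your chain/sequence.

This is useful for showing progress to the user, for using intermediate results, or for debugging your chain.

You can stream all steps (the default) or include/exclude steps by name, tags, or metadata.

This method yields JSONPatch operations that, when applied in the same order as received, build up the RunState.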

class LogEntry(TypedDict):
    id: str
    """ID of the sub-run."""
    name: str
    """Name of the object being run."""
    type: str
    """Type of the object being run, eg. prompt, chain, llm, etc."""
    tags: List[str]
    """List of tags for the run."""
    metadata: Dict[str, Any]
    """Key-value pairs of metadata for the run."""
    start_time: str
    """ISO-8601 timestamp of when the run started."""
    streamed_output_str: List[str]
    """List of LLM tokens streamed by this run, if applicable."""
    final_output: Optional[Any]
    """Final output of this run.
    Only available after the run has finished successfully."""
    end_time: Optional[str]
    """ISO-8601 timestamp of when the run ended.
    Only available after the run has finished."""

class RunState(TypedDict):
    id: str
    """ID of the run."""
    streamed_output: List[Any]
    """List of output chunks streamed by Runnable.stream()"""
    final_output: Optional[Any]
    """Final output of the run, usually the result of aggregating (`+`) streamed_output.
    Only available after the run has finished successfully."""
    logs: Dict[str, LogEntry]
    """Map of run names to sub-runs. If filters were supplied, this list will
    contain only the runs that matched the filters."""

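Streaming JSONPatch chunks

This is useful, for example, for streaming the JSONPatch in an HTTP server and then applying the operations on the client to rebuild the run state. See LangServe for tooling that makes it easier to build a web server from any Runnable.

async for chunk in retrieval_chain.astream_log(
    "where did harrison work?", include_names=["Docs"]
):
    print("-" * 40)
    print(chunk)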

----------------------------------------

RunLogPatch({'op': 'replace',

'path': '',

'value': {'final_output': None,

'id': '82e9b4b1-3dd6-4732-8db9-90e79c4da48c',

'logs': {},

'name': 'RunnableSequence',

'streamed_output': [],

'type': 'chain'}})

----------------------------------------

RunLogPatch({'op': 'add',

'path': '/logs/Docs',

'value': {'end_time': None,

'final_output': None,

'id': '9206e94a-57bd-48ee-8c5e-fdd1c52a6da2',

'metadata': {},

'name': 'Docs',

'start_time': '2024-01-19T22:33:55.902+00:00',

'streamed_output': [],

'streamed_output_str': [],

'tags': ['map:key:context', 'FAISS', 'OpenAIEmbeddings'],

'type': 'retriever'}})

----------------------------------------

RunLogPatch({'op': 'add',

'path': '/logs/Docs/final_output',

'value': {'documents': [Document(page_content='harrison worked at kensho')]}},

{'op': 'add',

'path': '/logs/Docs/end_time',

'value': '2024-01-19T22:33:56.064+00:00'})

----------------------------------------

RunLogPatch({'op': 'add', 'path': '/streamed_output/-', 'value': ''},

{'op': 'replace', 'path': '/final_output', 'value': ''})

----------------------------------------

RunLogPatch({'op': 'add', 'path': '/streamed_output/-', 'value': 'H'},

{'op': 'replace', 'path': '/final_output', 'value': 'H'})

----------------------------------------

RunLogPatch({'op': 'add', 'path': '/streamed_output/-', 'value': 'arrison'},

{'op': 'replace', 'path': '/final_output', 'value': 'Harrison'})

----------------------------------------

RunLogPatch({'op': 'add', 'path': '/streamed_output/-', 'value': ' worked'},

{'op': 'replace', 'path': '/final_output', 'value': 'Harrison worked'})

----------------------------------------

RunLogPatch({'op': 'add', 'path': '/streamed_output/-', 'value': ' at'},

{'op': 'replace', 'path': '/final_output', 'value': 'Harrison worked at'})

----------------------------------------

RunLogPatch({'op': 'add', 'path': '/streamed_output/-', 'value': ' Kens'},

{'op': 'replace', 'path': '/final_output', 'value': 'Harrison worked at Kens'})

----------------------------------------

RunLogPatch({'op': 'add', 'path': '/streamed_output/-', 'value': 'ho'},

{'op': 'replace',

'path': '/final_output',

'value': 'Harrison worked at Kensho'})

----------------------------------------

RunLogPatch({'op': 'add', 'path': '/streamed_output/-', 'value': '.'},

{'op': 'replace',

'path': '/final_output',

'value': 'Harrison worked at Kensho.'})

----------------------------------------

RunLogPatch({'op': 'add', 'path': '/streamed_output/-', 'value': ''})
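To make the "apply the operations in order" idea concrete, the sketch below replays a shortened, hand-written version of the patches above to rebuild the state. The helper `apply_op` is hypothetical and handles only the `add` and `replace` operations seen in this output; it skips JSON Pointer escaping and the other JSONPatch operations, so a real client would more likely rely on a JSONPatch library.

```python
from typing import Any, Dict, List


def apply_op(state: Any, op: Dict[str, Any]) -> Any:
    """Apply one 'add' or 'replace' operation and return the updated state."""
    if op["path"] == "":
        # An empty path targets the whole document.
        return op["value"]
    *parents, last = op["path"][1:].split("/")
    target = state
    for key in parents:
        # Walk down to the container that holds the final key.
        target = target[int(key)] if isinstance(target, list) else target[key]
    if isinstance(target, list):
        if last == "-":
            target.append(op["value"])  # "-" means "end of the array"
        elif op["op"] == "add":
            target.insert(int(last), op["value"])
        else:
            target[int(last)] = op["value"]
    else:
        target[last] = op["value"]
    return state


# Shortened, hand-written version of the streamed patches.
ops: List[Dict[str, Any]] = [
    {"op": "replace", "path": "",
     "value": {"final_output": None, "logs": {}, "streamed_output": []}},
    {"op": "add", "path": "/logs/Docs", "value": {"final_output": None}},
    {"op": "add", "path": "/streamed_output/-", "value": "H"},
    {"op": "replace", "path": "/final_output", "value": "H"},
    {"op": "add", "path": "/streamed_output/-", "value": "arrison"},
    {"op": "replace", "path": "/final_output", "value": "Harrison"},
]

state: Any = None
for op in ops:
    state = apply_op(state, op)
print(state)
```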

Streaming the incremental RunState

You can simply pass diff=False to get incremental values of RunState. The output is more verbose and contains more repeated parts.

async for chunk in retrieval_chain.astream_log(
    "where did harrison work?", include_names=["Docs"], diff=False
):
    print("-" * 70)
    print(chunk)

----------------------------------------------------------------------

RunLog({'final_output': None,

'id': '431d1c55-7c50-48ac-b3a2-2f5ba5f35172',

'logs': {},

'name': 'RunnableSequence',

'streamed_output': [],

'type': 'chain'})

----------------------------------------------------------------------

RunLog({'final_output': None,

'id': '431d1c55-7c50-48ac-b3a2-2f5ba5f35172',

'logs': {'Docs': {'end_time': None,

'final_output': None,

'id': '8de10b49-d6af-4cb7-a4e7-fbadf6efa01e',

'metadata': {},

'name': 'Docs',

'start_time': '2024-01-19T22:33:56.939+00:00',

'streamed_output': [],

'streamed_output_str': [],

'tags': ['map:key:context', 'FAISS', 'OpenAIEmbeddings'],

'type': 'retriever'}},

'name': 'RunnableSequence',

'streamed_output': [],

'type': 'chain'})

----------------------------------------------------------------------

RunLog({'final_output': None,

'id': '431d1c55-7c50-48ac-b3a2-2f5ba5f35172',

'logs': {'Docs': {'end_time': '2024-01-19T22:33:57.120+00:00',

'final_output': {'documents': [Document(page_content='harrison worked at kensho')]},

'id': '8de10b49-d6af-4cb7-a4e7-fbadf6efa01e',

'metadata': {},

'name': 'Docs',

'start_time': '2024-01-19T22:33:56.939+00:00',

'streamed_output': [],

'streamed_output_str': [],

'tags': ['map:key:context', 'FAISS', 'OpenAIEmbeddings'],

'type': 'retriever'}},

'name': 'RunnableSequence',

'streamed_output': [],

'type': 'chain'})

----------------------------------------------------------------------

RunLog({'final_output': '',

'id': '431d1c55-7c50-48ac-b3a2-2f5ba5f35172',

'logs': {'Docs': {'end_time': '2024-01-19T22:33:57.120+00:00',

'final_output': {'documents': [Document(page_content='harrison worked at kensho')]},

'id': '8de10b49-d6af-4cb7-a4e7-fbadf6efa01e',

'metadata': {},

'name': 'Docs',

'start_time': '2024-01-19T22:33:56.939+00:00',

'streamed_output': [],

'streamed_output_str': [],

'tags': ['map:key:context', 'FAISS', 'OpenAIEmbeddings'],

'type': 'retriever'}},

'name': 'RunnableSequence',

'streamed_output': [''],

'type': 'chain'})

----------------------------------------------------------------------

RunLog({'final_output': 'H',

'id': '431d1c55-7c50-48ac-b3a2-2f5ba5f35172',

'logs': {'Docs': {'end_time': '2024-01-19T22:33:57.120+00:00',

'final_output': {'documents': [Document(page_content='harrison worked at kensho')]},

'id': '8de10b49-d6af-4cb7-a4e7-fbadf6efa01e',

'metadata': {},

'name': 'Docs',

'start_time': '2024-01-19T22:33:56.939+00:00',

'streamed_output': [],

'streamed_output_str': [],

'tags': ['map:key:context', 'FAISS', 'OpenAIEmbeddings'],

'type': 'retriever'}},

'name': 'RunnableSequence',

'streamed_output': ['', 'H'],

'type': 'chain'})

----------------------------------------------------------------------

RunLog({'final_output': 'Harrison',

'id': '431d1c55-7c50-48ac-b3a2-2f5ba5f35172',

'logs': {'Docs': {'end_time': '2024-01-19T22:33:57.120+00:00',

'final_output': {'documents': [Document(page_content='harrison worked at kensho')]},

'id': '8de10b49-d6af-4cb7-a4e7-fbadf6efa01e',

'metadata': {},

'name': 'Docs',

'start_time': '2024-01-19T22:33:56.939+00:00',

'streamed_output': [],

'streamed_output_str': [],

'tags': ['map:key:context', 'FAISS', 'OpenAIEmbeddings'],

'type': 'retriever'}},

'name': 'RunnableSequence',

'streamed_output': ['', 'H', 'arrison'],

'type': 'chain'})

----------------------------------------------------------------------

RunLog({'final_output': 'Harrison worked',

'id': '431d1c55-7c50-48ac-b3a2-2f5ba5f35172',

'logs': {'Docs': {'end_time': '2024-01-19T22:33:57.120+00:00',

'final_output': {'documents': [Document(page_content='harrison worked at kensho')]},

'id': '8de10b49-d6af-4cb7-a4e7-fbadf6efa01e',

'metadata': {},

'name': 'Docs',

'start_time': '2024-01-19T22:33:56.939+00:00',

'streamed_output': [],

'streamed_output_str': [],

'tags': ['map:key:context', 'FAISS', 'OpenAIEmbeddings'],

'type': 'retriever'}},

'name': 'RunnableSequence',

'streamed_output': ['', 'H', 'arrison', ' worked'],

'type': 'chain'})

----------------------------------------------------------------------

RunLog({'final_output': 'Harrison worked at',

'id': '431d1c55-7c50-48ac-b3a2-2f5ba5f35172',

'logs': {'Docs': {'end_time': '2024-01-19T22:33:57.120+00:00',

'final_output': {'documents': [Document(page_content='harrison worked at kensho')]},

'id': '8de10b49-d6af-4cb7-a4e7-fbadf6efa01e',

'metadata': {},

'name': 'Docs',

'start_time': '2024-01-19T22:33:56.939+00:00',

'streamed_output': [],

'streamed_output_str': [],

'tags': ['map:key:context', 'FAISS', 'OpenAIEmbeddings'],

'type': 'retriever'}},

'name': 'RunnableSequence',

'streamed_output': ['', 'H', 'arrison', ' worked', ' at'],

'type': 'chain'})

----------------------------------------------------------------------

RunLog({'final_output': 'Harrison worked at Kens',

'id': '431d1c55-7c50-48ac-b3a2-2f5ba5f35172',

'logs': {'Docs': {'end_time': '2024-01-19T22:33:57.120+00:00',

'final_output': {'documents': [Document(page_content='harrison worked at kensho')]},

'id': '8de10b49-d6af-4cb7-a4e7-fbadf6efa01e',

'metadata': {},

'name': 'Docs',

'start_time': '2024-01-19T22:33:56.939+00:00',

'streamed_output': [],

'streamed_output_str': [],

'tags': ['map:key:context', 'FAISS', 'OpenAIEmbeddings'],

'type': 'retriever'}},

'name': 'RunnableSequence',

'streamed_output': ['', 'H', 'arrison', ' worked', ' at', ' Kens'],

'type': 'chain'})

----------------------------------------------------------------------

RunLog({'final_output': 'Harrison worked at Kensho',

'id': '431d1c55-7c50-48ac-b3a2-2f5ba5f35172',

'logs': {'Docs': {'end_time': '2024-01-19T22:33:57.120+00:00',

'final_output': {'documents': [Document(page_content='harrison worked at kensho')]},

'id': '8de10b49-d6af-4cb7-a4e7-fbadf6efa01e',

'metadata': {},

'name': 'Docs',

'start_time': '2024-01-19T22:33:56.939+00:00',

'streamed_output': [],

'streamed_output_str': [],

'tags': ['map:key:context', 'FAISS', 'OpenAIEmbeddings'],

'type': 'retriever'}},

'name': 'RunnableSequence',

'streamed_output': ['', 'H', 'arrison', ' worked', ' at', ' Kens', 'ho'],

'type': 'chain'})

----------------------------------------------------------------------

RunLog({'final_output': 'Harrison worked at Kensho.',

'id': '431d1c55-7c50-48ac-b3a2-2f5ba5f35172',

'logs': {'Docs': {'end_time': '2024-01-19T22:33:57.120+00:00',

'final_output': {'documents': [Document(page_content='harrison worked at kensho')]},

'id': '8de10b49-d6af-4cb7-a4e7-fbadf6efa01e',

'metadata': {},

'name': 'Docs',

'start_time': '2024-01-19T22:33:56.939+00:00',

'streamed_output': [],

'streamed_output_str': [],

'tags': ['map:key:context', 'FAISS', 'OpenAIEmbeddings'],

'type': 'retriever'}},

'name': 'RunnableSequence',

'streamed_output': ['', 'H', 'arrison', ' worked', ' at', ' Kens', 'ho', '.'],

'type': 'chain'})

----------------------------------------------------------------------

RunLog({'final_output': 'Harrison worked at Kensho.',

'id': '431d1c55-7c50-48ac-b3a2-2f5ba5f35172',

'logs': {'Docs': {'end_time': '2024-01-19T22:33:57.120+00:00',

'final_output': {'documents': [Document(page_content='harrison worked at kensho')]},

'id': '8de10b49-d6af-4cb7-a4e7-fbadf6efa01e',

'metadata': {},

'name': 'Docs',

'start_time': '2024-01-19T22:33:56.939+00:00',

'streamed_output': [],

'streamed_output_str': [],

'tags': ['map:key:context', 'FAISS', 'OpenAIEmbeddings'],

'type': 'retriever'}},

'name': 'RunnableSequence',

'streamed_output': ['',

'H',

'arrison',

' worked',

' at',

' Kens',

'ho',

'.',

''],

'type': 'chain'})

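Parallelism

Let's take a look at how LangChain Expression Language supports parallel requests. For example, when you use a RunnableParallel (often written as a dictionary), it executes each element in parallel.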

from langchain_core.runnables import RunnableParallel

chain1 = ChatPromptTemplate.from_template("tell me a joke about {topic}") | model

chain2 = (
    ChatPromptTemplate.from_template("write a short (2 line) poem about {topic}")
    | model
)

combined = RunnableParallel(joke=chain1, poem=chain2)

%%time

chain1.invoke({"topic": "bears"})

CPU times: user 18 ms, sys: 1.27 ms, total: 19.3 ms

Wall time: 692 ms

AIMessage(content="Why don't bears wear shoes?\n\nBecause they already have bear feet!")

%%time

chain2.invoke({"topic": "bears"})

CPU times: user 10.5 ms, sys: 166 µs, total: 10.7 ms

Wall time: 579 ms

AIMessage(content="In forest's embrace,\nMajestic bears pace.")

%%time

combined.invoke({"topic": "bears"})

CPU times: user 32 ms, sys: 2.59 ms, total: 34.6 ms

Wall time: 816 ms

{'joke': AIMessage(content="Sure, here's a bear-related joke for you:\n\nWhy did the bear bring a ladder to the bar?\n\nBecause he heard the drinks were on the house!"),

'poem': AIMessage(content="In wilderness they roam,\nMajestic strength, nature's throne.")}
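In the timings above, the combined call takes roughly as long as the slower branch rather than the sum of both. The sketch below shows the same effect with a thread pool. The helper `run_parallel` and the two sleeping functions are hypothetical stand-ins for the chains; this illustrates the general technique and is not LangChain's own code.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict


def run_parallel(branches: Dict[str, Callable[[Any], Any]], value: Any) -> Dict[str, Any]:
    """Call every branch with the same input on its own thread; return a dict of results."""
    with ThreadPoolExecutor(max_workers=len(branches)) as pool:
        futures = {name: pool.submit(func, value) for name, func in branches.items()}
        return {name: future.result() for name, future in futures.items()}


def slow_joke(topic: str) -> str:
    time.sleep(0.1)  # simulate a model call
    return f"joke about {topic}"


def slow_poem(topic: str) -> str:
    time.sleep(0.1)  # simulate a model call
    return f"poem about {topic}"


start = time.perf_counter()
result = run_parallel({"joke": slow_joke, "poem": slow_poem}, "bears")
elapsed = time.perf_counter() - start
print(result, f"elapsed: {elapsed:.2f}s")
```

Since both branches wait at the same time, the elapsed time should be close to one branch's latency, though the exact figure depends on the machine.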

Parallelism on batches

Parallelism can be combined with other runnables. Let's try using parallelism with batches.

%%time

chain1.batch([{"topic": "bears"}, {"topic": "cats"}])

CPU times: user 17.3 ms, sys: 4.84 ms, total: 22.2 ms

Wall time: 628 ms

[AIMessage(content="Why don't bears wear shoes?\n\nBecause they have bear feet!"),

AIMessage(content="Why don't cats play poker in the wild?\n\nToo many cheetahs!")]

%%time

chain2.batch([{"topic": "bears"}, {"topic": "cats"}])

CPU times: user 15.8 ms, sys: 3.83 ms, total: 19.7 ms

Wall time: 718 ms

[AIMessage(content='In the wild, bears roam,\nMajestic guardians of ancient home.'),

AIMessage(content='Whiskers grace, eyes gleam,\nCats dance through the moonbeam.')]

%%time

combined.batch([{"topic": "bears"}, {"topic": "cats"}])

CPU times: user 44.8 ms, sys: 3.17 ms, total: 48 ms

Wall time: 721 ms

[{'joke': AIMessage(content="Sure, here's a bear joke for you:\n\nWhy don't bears wear shoes?\n\nBecause they have bear feet!"),

'poem': AIMessage(content="Majestic bears roam,\nNature's strength, beauty shown.")},

{'joke': AIMessage(content="Why don't cats play poker in the wild?\n\nToo many cheetahs!"),

'poem': AIMessage(content="Whiskers dance, eyes aglow,\nCats embrace the night's gentle flow.")}]